Not So Dirty Deeds Done Dirt Cheap

Desk work disruption, a framework for AI job security, and Service-as-a-Software

To be a desk worker in the era of AI is to be aware of one’s own disruption. The advent of LLMs has wrought a karmic 180° in the conversation around automation, shifting the spotlight from primarily blue-collar roles in industries like logistics and manufacturing to the white-collar roles of people who occasionally conjecture about blue-collar work and meaning online.

As an office worker myself, I’ve watched with intrigue as new generations of models have shattered benchmarks on the standardized tests and licensing exams at the bedrock of white-collar qualification. Yet, I’ve also found myself at odds with much of the commentary around the timing, nature, and level of immediate disruption facing white-collar work.

And as an investor looking at AI-enabled companies, I spend a lot of time thinking about that disruption and where it’s likely to play out. Lately I’ve treated the question as an inversion exercise: identifying which professions or industries are unlikely to see displacement.

How should we think about job security in the age of AI?

Pack Up Your Desks

When ChatGPT burst onto the scene in 2022, things got weird. There was a pervasive sense that the genie had finally left the bottle. As I engaged with ChatGPT and later models, it became clear that LLMs were uniquely good at a few core things that prior software stumbled with:

Analyzing – digesting large amounts of multi-modal information to retrieve answers

Summarizing – comprehending patterns and presenting information in new formats

Acting on instruction – taking flexible orders, defining context, and using that context to act non-deterministically

White-collar work is filled with these things. It’s no wonder we blitzed past the Turing Test in favor of the Bar, MCAT, or GMAT. While there is debate on the soundness of qualifications and benchmark testing, we have ample reason to speculate on when the desk packing begins.

But Which Desks?

The noise around which jobs are at risk of automation is overwhelming. Many of the arguments I see rest on either an overgeneralization (e.g. “AI will replace lawyers”) that assumes a one-size-fits-all view of a profession holds water, or a false equivalence (e.g. “AI passed the Bar exam, so it could do a lawyer’s job”) that assumes meeting one benchmark means performing the totality of the job well.

So what does it mean for an AI agent to be able to perform a job at all? If we’re focused on true displacement, I’d define it along these lines: an autonomous actor capable of executing all of the generally accepted tasks of a role with accuracy and output at or above those of the median person in that role.
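To make that bar concrete, here is a minimal Python sketch of the definition, assuming each task can be collapsed into a single comparable score (a simplification, since the definition covers both accuracy and output). The task names and scores are hypothetical. It also shows why the benchmark argument falls short: excelling at one task doesn’t clear a bar that spans every task.

```python
from statistics import median


def displaces(agent: dict[str, float], humans: dict[str, list[float]]) -> bool:
    """Return True only if the agent meets or beats the human median on every task.

    `humans` maps each generally accepted task of a role to observed human
    scores; `agent` maps tasks to the agent's score. A task the agent can't
    perform at all (missing key) counts as a failure.
    """
    return all(
        task in agent and agent[task] >= median(scores)
        for task, scores in humans.items()
    )


# Hypothetical role with three tasks and made-up human scores.
human_scores = {
    "legal_research": [60, 70, 80],
    "client_intake": [70, 80, 90],
    "negotiation": [50, 65, 85],
}

# Strong on one task only: not displacement.
print(displaces({"legal_research": 90}, human_scores))  # False
# At or above the median on every task: displacement under this definition.
print(displaces({"legal_research": 90, "client_intake": 80, "negotiation": 65}, human_scores))  # True
```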

A Framework for AI Job Security

Defining displacement helps zero in on points of failure. To determine which jobs are furthest from the chopping block, I’ve focused on the difficulties agentic systems are likely to encounter in the workplace.

Task Variance. Jobs with high task variance span a wide range of functions and are largely nonroutine. Jobs with low task variance are extremely repetitive. Agentic systems are tuned toward specific tasks, and building them often means thinking through the edge cases where things might go off the rails and how to guarantee, repeatably, that a system accomplishes the goals at hand. Each added kind of task can multiply that complexity by orders of magnitude, increasing the surface area for failure. Low task variance is great for agents.
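A toy model shows how quickly that surface area grows. This sketch assumes, as a simplification the framework itself doesn’t make, that each distinct task type is an independent chance to fail and that every type is handled with the same reliability. The 99% figure and the task counts are hypothetical.

```python
def job_reliability(per_task_reliability: float, distinct_tasks: int) -> float:
    """Chance an agent handles every distinct task type in a role correctly.

    Toy model: task types are assumed independent and equally reliable,
    so the per-task probabilities simply multiply.
    """
    if not 0.0 <= per_task_reliability <= 1.0:
        raise ValueError("reliability must be a probability")
    if distinct_tasks < 0:
        raise ValueError("task count cannot be negative")
    return per_task_reliability ** distinct_tasks


# Same per-task reliability, increasingly varied roles (hypothetical counts).
for n in (1, 5, 50):
    print(n, round(job_reliability(0.99, n), 3))
```

At 99% per task type, a role with one task type stays at 0.99, while a role with 50 falls to roughly 0.6. Real task failures are rarely independent, so treat this as intuition rather than a forecast.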

Skill Variance. Think of skill variance as how specialized a function is, where higher specialization creates greater odds of outlier performers. In the workplace, I argue that high skill variance benefits agents. Many jobs have performance curves that look more like a power-law distribution than a bell curve, and that rightward skew puts the median well below the mean. Outlier skill is typically born of the things agents are great at: active practice against massive amounts of data and unlimited reps. Since the displacement bar is the median performer, it’s easier for agentic systems to clear it in these roles – to surpass what we think of as entry-level performance.
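The median-versus-mean gap is easy to check for a Pareto (power-law) distribution using its standard closed forms. The shape parameter below is a hypothetical choice for illustration, not an estimate for any real profession.

```python
import math


def pareto_mean(alpha: float, x_min: float = 1.0) -> float:
    """Mean of a Pareto distribution; finite only when alpha > 1."""
    if alpha <= 1:
        return math.inf
    return alpha * x_min / (alpha - 1)


def pareto_median(alpha: float, x_min: float = 1.0) -> float:
    """Median of a Pareto distribution: x_min * 2^(1/alpha)."""
    return x_min * 2 ** (1 / alpha)


alpha = 1.5  # hypothetical heavy right tail
print(f"mean   = {pareto_mean(alpha):.2f}")    # mean   = 3.00
print(f"median = {pareto_median(alpha):.2f}")  # median = 1.59
```

In a bell curve the two coincide. Here the median performer sits at roughly half the mean, so an agent can beat the median while still falling well short of the outliers who pull the average up.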

Regulation. In certain professions, the limit on AI doing the job won’t be performance but regulation. AI lawyers, doctors, and financial professionals will face increasing regulatory scrutiny due to strict licensing processes and strong industry associations. Unions will play this role for other work (see the 2023 Hollywood writers’ and actors’ strikes for a recent example). I think the coming years will see an intermediate phase in which industry groups push hard for regulation that hampers the rollout of agentic models. Low regulation benefits agents.

Criticality. LLM-based systems don’t yet mix well with mission-critical work. It’s the flip side of the non-deterministic coin: the creativity and adaptability agentic systems are prized for can make it devilishly difficult to turn them loose on high-stakes tasks. Lower-criticality workloads benefit agents.

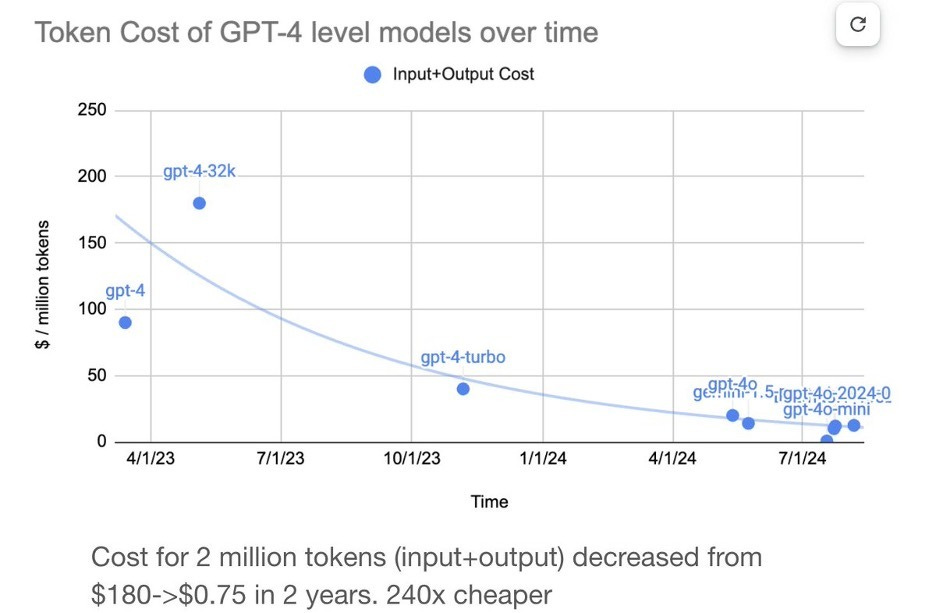

Cost. It’s worth looking at cost on two levels: the job and the organization. Agentic models should address serious cost centers. Many professions carry either high labor costs per role or enough volume in a lower-cost function to weigh on the organization as a whole. Cost needs to justify investment.

Training Data. Perhaps the most self-explanatory factor: agentic approaches thrive on training data for specific use cases and outcomes, such as case and contract data in law, code repositories in software engineering, and patient data in medical diagnostics. If a profession has little data online, or little that can be brought online, the predictive superpowers of LLMs fall short.

Disinversion Time

I started writing this after going very deep on Sierra – a company co-founded by Bret Taylor to replace customer support agents in consumer-facing roles. He has been on a bit of an interview spree of late, so there’s plenty of material linked in “The Book” below for going deeper.

Sierra is a fascinating case study in the above framework. In eCommerce customer support, its initial market, you have limited task variance, limited regulation, low criticality, medium cost (more volume-driven, equal to 5-10% of revenue before factoring in support-adjacent issues like returns), and lots of training data in the form of tickets. Not a bad showing. The only major factor where it falls somewhat short is specialization.

Harvey and EvenUp, two other heavily funded startups, have their eyes on law – just not the lawyers. High task variance, regulation, and criticality make the work of most lawyers a tricky proposition for automation.

What about the work of a paralegal?

Lawyers depend on paralegals for much of their lower-criticality, repetitive work, and paralegals lack unions and strong industry associations. Harvey focuses on use cases like contract drafting, redlining, case summaries, and other administrative prep in corporate law. EvenUp tackles demand letter generation and aspects of the negotiation workflow in personal injury law.
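The framework can be sketched as a crude checklist. This is a minimal illustration, assuming a three-level rating per factor and an unweighted tally (+1 when a factor sits at the end that favors agents, -1 at the opposite end, 0 for medium or unrated); the framework itself doesn’t prescribe weights. The ratings encode only what the profiles above state, with training data for law taken from the framework’s own examples. The names `Level`, `FAVORS_AGENTS`, and `automation_score` are hypothetical.

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Which end of each factor favors agents, per the framework above.
FAVORS_AGENTS = {
    "task_variance": Level.LOW,
    "skill_variance": Level.HIGH,
    "regulation": Level.LOW,
    "criticality": Level.LOW,
    "cost": Level.HIGH,
    "training_data": Level.HIGH,
}


def automation_score(profile: dict[str, Level]) -> int:
    """Unweighted tally: +1 favorable, -1 unfavorable, 0 medium or unrated."""
    score = 0
    for factor, favorable in FAVORS_AGENTS.items():
        level = profile.get(factor)
        if level is None or level is Level.MEDIUM:
            continue
        score += 1 if level is favorable else -1
    return score


# Ratings taken from the profiles in the text; unstated factors are omitted.
ecommerce_support = {
    "task_variance": Level.LOW,
    "skill_variance": Level.LOW,   # falls short on specialization
    "regulation": Level.LOW,
    "criticality": Level.LOW,
    "cost": Level.MEDIUM,
    "training_data": Level.HIGH,   # support tickets
}
lawyer = {
    "task_variance": Level.HIGH,
    "regulation": Level.HIGH,
    "criticality": Level.HIGH,
    "training_data": Level.HIGH,   # case and contract data
}
paralegal = {
    "task_variance": Level.LOW,    # repetitive work
    "regulation": Level.LOW,       # no unions or strong associations
    "criticality": Level.LOW,
    "training_data": Level.HIGH,
}

for name, profile in [("ecommerce support", ecommerce_support),
                      ("lawyer", lawyer), ("paralegal", paralegal)]:
    print(name, automation_score(profile))
```

Lawyers land well below both paralegals and eCommerce support, consistent with the argument above. Comparing the other two is less meaningful, since unrated factors count as zero; a fuller version would rate every factor and probably weight regulation and criticality more heavily.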

If you read early customer feedback, you’ll notice some of the common frustrations with early agentic systems and non-deterministic output, but you’ll also see both products sometimes described as working at a “paralegal” or “first-year associate” level.

Looking for other professions with similar profiles, I think we’ll see heavy focus on the cogs in the machinery of higher-cost services workflows – healthcare admin, insurance claims, IT, web development, and brokers or intermediaries in a lot of financial services.

Service-as-a-Software?

It’s clear why many early builders are focused on copilot approaches as opposed to the work-disrupting persona agents that we’ve been promised. Displacement is hard. Most roles have cost motivations and some sort of data to refine against, but very few demonstrate the right combinations of tasks, skill, regulation, and criticality to present enticing targets for immediate automation.

Copilots avoid thorny task and regulatory issues by empowering skilled workers rather than supplanting them. Most startups bringing vertical AI applications to market currently fall in this camp. If you can’t beat them, join and improve them. By embedding itself early in customers’ data and workflows, this approach positions a challenger well to disrupt entry-level functions as models and methods improve in the near term and, ultimately, to reap the massive rewards of performing critical, regulated job functions in the long term.

Another route is to target the organization level. I suspect we’ll see more and more new entrants take this approach: instead of competing to build the agentic lawyer, build the agentic firm.

Agentic firms are built from the ground up with the latest and greatest tech – they use available copilots, experiment with new models, and spend a lot of effort understanding the “glue” of a services firm. How do the roles and workflows fit together? Which hand-offs are most error-prone, and where is the low-hanging fruit for automation?

I suspect agentic firms helmed by industry insiders will play a key role in breaking regulatory barriers across professions. It’s easy to focus on fortifying defenses when the threat lies outside. It’s another thing to defend against motivated challengers from within your own walls.

Ultimately, for the vision to succeed – for us to achieve autonomous services experiences that feel indistinguishable from software – we need both. Copilots help us understand tasks in depth, and agentic firms help us de-risk a service’s breadth. Each addresses failure points on the “persona agent” path.

On Jevons and Jobs

In the discourse on AI-mediated job displacement, I see arguments for panic nearly as often as optimism. AI abundance or necessary UBI?

The case for panic typically revolves around (1) sudden AGI, (2) the speed of AI-mediated disruption relative to prior platform shifts, or (3) its relative breadth and depth.

I leave the date of official AGI to OpenAI’s lawyers. The other causes for panic are worth discussing.

It took upwards of five decades to phase out manual switchboard operators. How long will it take AI to displace knowledge workers? There’s some degree of consensus that it’ll be much faster, especially given the fundamentally software-based nature of the shift. However, I think we consistently underrate how time- and capital-intensive the hardware buildout needed to support AI will be.

As to the breadth and depth aspect, this entire piece hopefully illustrates some of the structural difficulty in replacing work and workers with autonomous systems today. It may not be a matter of “if”, but the “when” is thorny.

The AI optimists remind us of Jevons. The paradox named after economist William Stanley Jevons describes how, as a resource is used more efficiently, total demand for it often rises rather than falls, because the efficiency gain makes it cheaper to use. Electricity generation becomes more efficient -> electricity gets cheaper -> consumption grows by more than the efficiency gain, so total fuel burned goes up. They reason the same will likely be true of AI compute.

The crux of the Jevons Paradox playing out in AI is a positive demand shock outpacing a messy scaling of supply. I.e., if agentic frameworks reduce the cost of services like legal review, and the resulting increase in demand is more than our automation efforts can absorb… well, we need more knowledge workers.
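A back-of-the-envelope version of that condition, under assumptions the text doesn’t spell out: automation makes each worker k times as productive, the price of the service falls to 1/k of its old level, and demand has a constant price elasticity ε. Demand then grows by a factor of k^ε while labor per unit of service shrinks to 1/k, so total labor changes by k^(ε−1) and rises only when demand is elastic (ε > 1). The function name and the numbers below are hypothetical.

```python
def labor_demand_multiplier(productivity_gain: float, price_elasticity: float) -> float:
    """Factor by which total human labor changes after automation.

    Assumes price falls to 1/k of its old level (k = productivity_gain),
    constant-elasticity demand Q ∝ price^(-elasticity), and labor per unit
    of service falling to 1/k. Then labor ∝ k**elasticity / k.
    """
    if productivity_gain <= 0:
        raise ValueError("productivity gain must be positive")
    return productivity_gain ** (price_elasticity - 1)


# Hypothetical: automation doubles output per worker in legal review.
for elasticity in (0.5, 1.0, 1.5):
    print(elasticity, round(labor_demand_multiplier(2.0, elasticity), 2))
```

With productivity doubled, inelastic demand (ε = 0.5) cuts labor to about 71% of its prior level, unit elasticity leaves it unchanged, and elastic demand (ε = 1.5) raises it by about 41%. Real demand curves are messier, which is part of why the forecasts below are so uncertain.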

We also likely need new categories of jobs to support new modes of automation and the entirely new pools of behavior that emerge from cheap agentic scaling. Is it a $4.6T opportunity, a $10T opportunity, or something else entirely? If history is any guide, we’re probably forecasting poorly.

“The Book”

Recommended content for going deeper on Service-as-a-Software or figuring out when you’re likely to lose your own desk job: